HPC System Utilization

User projects

From time to time, RRZE (now NHR@FAU) asks its HPC customers to provide a short report on the work they are doing on the HPC systems. You can find an extensive list of ongoing and past user projects on our User Project Page.

HPC users and usage

RRZE operates big HPC systems (LiMa – shut down at the end of 2018, Emmy – shut down in 09/2022, Meggie, Fritz & Alex – since 2022), a throughput cluster (Woody), and specialized systems (TinyFAT, TinyGPU, Windows HPC – shut down in June 2018). As of Q4/2022, there are in total more than 2’000 nodes with more than 90’000 cores and almost 400 TB of (distributed) main memory, as well as more than 10 PB of disk storage in five storage systems plus a transparently managed tape library for offline storage.

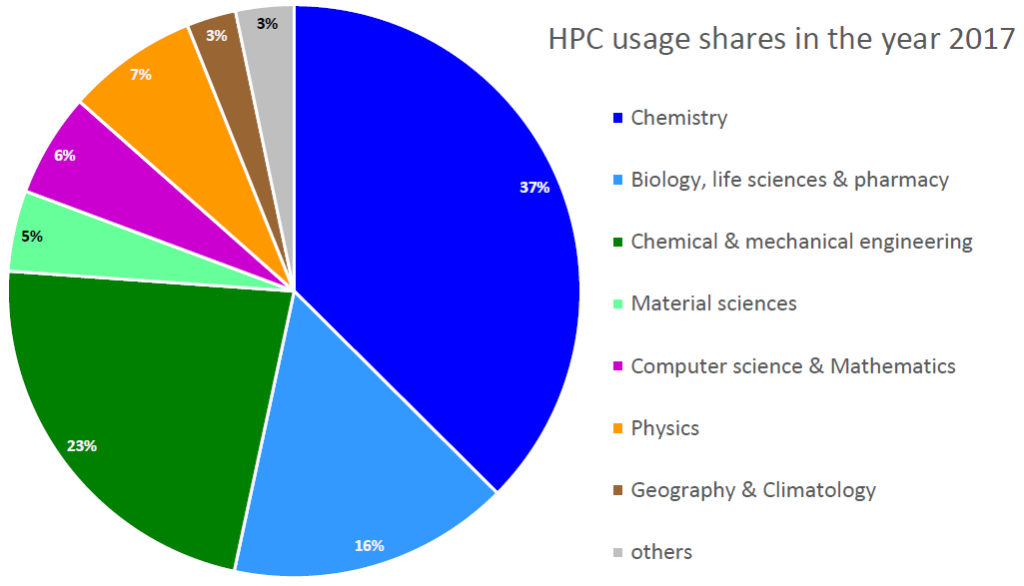

Highlights of 2017

In 2017, more than 550 accounts from almost 70 groups were active (i.e. consumed CPU cycles) on RRZE’s HPC systems. This includes scientists from all five faculties of the University, students doing labs as part of their studies or for their final bachelor or master thesis, as well as a few users from regional universities and colleges or external collaborators. In total, about 200 million core hours were delivered to 1.7 million jobs.

Highlights of 2018

The year 2018 was marked by a significant extension of storage capacity through a “shareholder NFS server”. Several user groups extended TinyGPU with up-to-date (consumer) GPUs. But we also had to shut down two systems (LiMa and Windows HPC). Both had been up and running for more than eight years. Over the years, LiMa served more than one thousand users and delivered almost 300 million core hours to 2.6 million jobs.

In 2018, almost 600 accounts from more than 70 groups were active (i.e. consumed CPU cycles) on RRZE’s HPC systems. This includes scientists from all five faculties of the University, students doing labs as part of their studies or for their final bachelor or master thesis, as well as a few users from regional universities and colleges or external collaborators. In total, more than 200 million core hours were delivered to almost 1.6 million jobs.

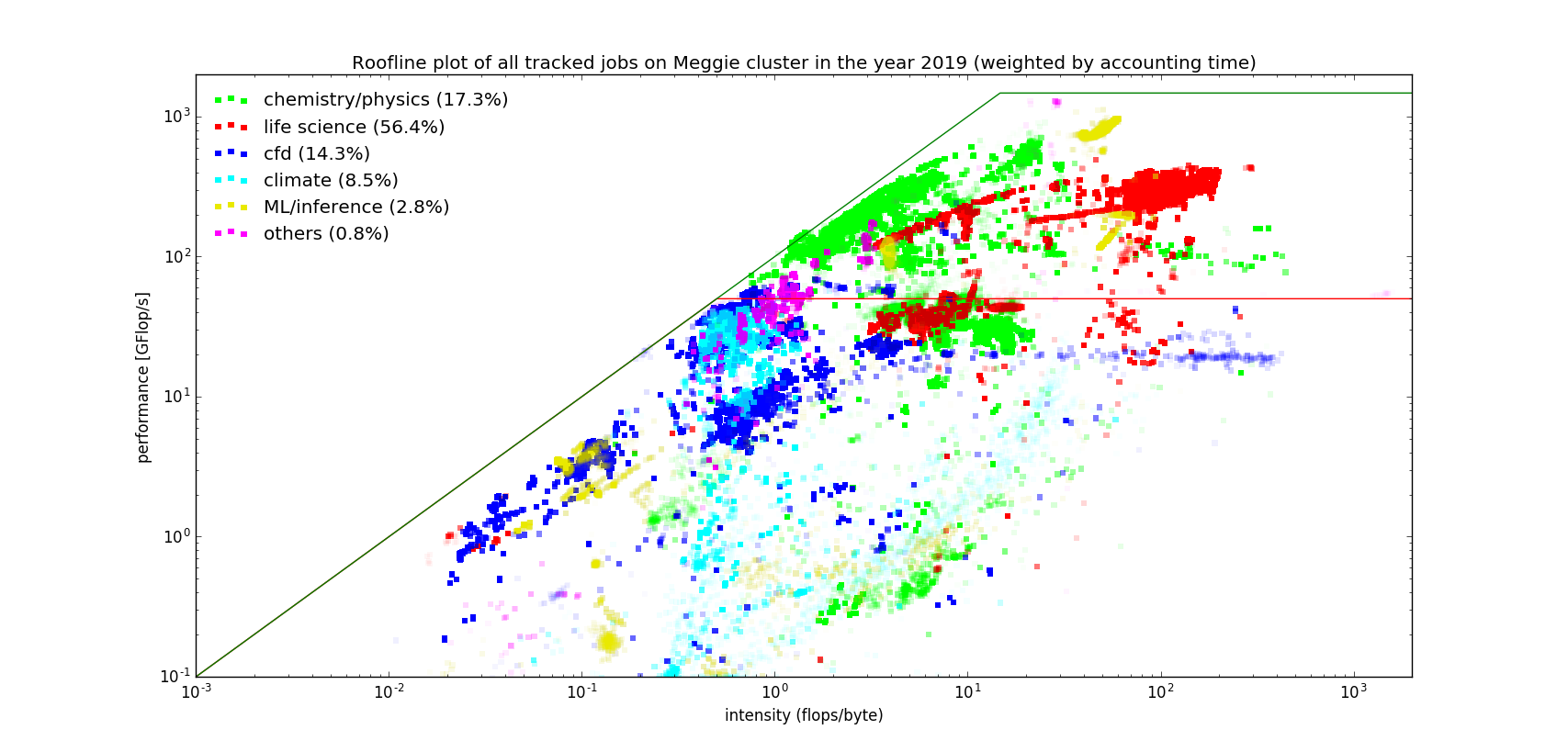

Highlights of 2019

In 2019, about 530 accounts from 70 groups were active (i.e. consumed CPU cycles) on RRZE’s HPC systems. This again includes scientists from all five faculties of the University, students doing labs as part of their studies or for their final bachelor or master thesis, as well as a few users from regional universities and colleges or external collaborators. In total, about 215 million core hours were delivered to more than 1.2 million jobs.

Only “minor” hardware extensions occurred during 2019: 12 additional nodes with 48 NVIDIA RTX 2080 Ti GPUs for TinyGPU (+51%; all financed by individual user groups), yet another “shareholder NFS server” (+450 TB), and finally an addition of 112 nodes to the Woody throughput cluster (+64%), financed by the ending excellence cluster EAM and an upcoming CRC. The major proposal for FAU’s next big parallel cluster (4.5 million EUR) is under review by DFG, and we hope to get approval in early 2020.

Highlights of 2020

In 2020, our DFG application was approved and we succeeded with our NHR application. The tender for a big procurement (8 million EUR for a parallel computer and a GPGPU cluster) was started in autumn. Several groups again financed significant extensions of TinyGPU and TinyFAT. These new nodes also mark the transition from Ubuntu 18.04 to Ubuntu 20.04 and from Torque/Maui to Slurm as the batch system. Moreover, the HPC storage system for $HOME and $VAULT was replaced during summer 2020, significantly increasing the online disk capacity to several petabytes.

The number of active accounts and groups further increased to 738 and 83, respectively. This again includes scientists from all five faculties of the University, students doing labs as part of their studies or for their final bachelor or master thesis, as well as a few users from regional universities and colleges or external collaborators. In total, about 213 million core hours were delivered to more than 1.6 million jobs.
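To put the yearly totals into perspective, the following minimal sketch estimates the average number of core hours per job for 2017 to 2020. It uses only the rounded figures quoted in the highlights above ("about", "more than", "almost"), so the results are rough approximations rather than official statistics; the names `yearly_usage` and `core_hours_per_job` are purely illustrative.

```python
# Rounded figures taken from the yearly highlights: (core hours, jobs).
# The source gives them only approximately, so treat the averages as rough estimates.
yearly_usage = {
    2017: (200e6, 1.7e6),
    2018: (200e6, 1.6e6),
    2019: (215e6, 1.2e6),
    2020: (213e6, 1.6e6),
}


def core_hours_per_job(core_hours: float, jobs: float) -> float:
    """Average core hours consumed by a single job."""
    return core_hours / jobs


# Map each year to its estimated average job size.
averages = {
    year: core_hours_per_job(core_hours, jobs)
    for year, (core_hours, jobs) in yearly_usage.items()
}

for year, avg in sorted(averages.items()):
    print(f"{year}: roughly {avg:.0f} core hours per job")
```

With these rounded inputs, 2019 stands out: fewer jobs shared a slightly larger core-hour total, so the average job was noticeably bigger than in the neighbouring years.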

Highlights of 2021

Our work in 2021 was dominated by the transition of the HPC services from RRZE to NHR@FAU. The newly established Erlangen National Center for High Performance Computing (NHR@FAU) is now in charge not only of the national services but also of all basic Tier3 HPC services of FAU. Dedicated financial resources are available for the basic Tier3 services; however, we exploit as many synergies as possible in purchasing and operating the hardware as well as in user services. Thus, from the outside NHR@FAU looks like one unit despite the different financing sources and duties. Besides this smooth transition in the background, the procurement of the new NHR/Tier3 systems Alex (GPGPU cluster) and Fritz (parallel computer) was a year-long task. The original plan was to get Alex and Fritz operational in Q3/2021. However, due to the worldwide shortage not only of IT equipment but also of many other supplies, only Alex could be brought into early-access mode by the end of 2021.

The number of active accounts and groups was on a similar level as in the past years. NHR@FAU was also able to welcome its first national users. Fortunately, the staff of NHR@FAU was also increased by several people.

Preliminary highlights of 2022

Alex and Fritz became fully operational, and both have already been extended by additional nodes. On some days, the peak power consumption of all HPC systems exceeds 1 MW. On Fritz, we sometimes see more than 1 PFlop/s of sustained performance in regular production.

NHR is a major game changer with already 12 large-scale projects, 30 normal projects, and 13 test/porting projects from all over Germany.

Research projects associated with any of the HPC clusters except Alex+Fritz (items occur multiple times if a field is mentioned for multiple clusters) – extracted from FAU’s CRIS database

- A stochastic estimate of sea level contribution from glaciers and ice caps using satellite remote sensing

- Tapping the potential of Earth Observations

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Measurements of height and mass changes of glaciers and ice caps outside the large ice sheets using TanDEM-X

- Deep-Learning-Informed Glacio-Hydrological Threat

- Study of the dynamic state of ice shelves on the south-western Antarctic Peninsula by an interdisciplinary approach of remote sensing, fracture mechanics and modelling of ice dynamics

- A stochastic estimate of sea level contribution from glaciers and ice caps using satellite remote sensing

- Climate sensitivity of ice shelves on the western Antarctic Peninsula

- Video-based Re-Identification for Animals

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Measurements of height and mass changes of glaciers and ice caps outside the large ice sheets using TanDEM-X

- Ice thickness, remote sensing and sensitivity experiments using an ice dynamic model for main outlet glaciers of the southern Patagonian icefield

- Development of an interactive platform for processing and distribution of data sets with glaciological variables based on Sentinel-1 data (for glaciers and ice caps outside the large ice sheets)

- Tapping the potential of Earth Observations

- Deep-Learning-Informed Glacio-Hydrological Threat

- Teilprojekt P12 - Postdoctoral Project: Quantum-to-Continuum Model of Thermoset Fracture

- Video-based Re-Identification for Animals

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Characterization of molecular diffusion in liquids with dissolved gases

- Tapping the potential of Earth Observations

- Metaprogrammierung für Beschleunigerarchitekturen

- Mesoscopic simulation of the selective beam melting process

- Pre-standardisation of incremental FIB micro-milling for intrinsic stress evaluation at the sub-micron scale

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Characterization of molecular diffusion in liquids with dissolved gases

- Substrate impact on flow induced particle motion in laminar shear flow

- Numerical modeling of local material properties and thereof derived process strategies for powder bed based additive manufacturing of bulk metallic glasses (T2)

- Accurate determination of binary gas diffusion coefficients by using laser-optical measurement methods and molecular dynamics simulations

- Cooperative Action of SNARE Peptides in Fusion

- Modellgestützte Auswertung von Diffusionsexperimenten an binären Gasgemischen in einer Loschmidt-Zelle

- Dispersion Effects on Reactivity and Chemo-, Regio- and Stereoselectivity in Organocatalysed Domino Reactions: A Joint Experimental and Theoretical Study

- Teilprojekt P12 - Postdoctoral Project: Quantum-to-Continuum Model of Thermoset Fracture

- Schädigungsmechanismen und mikrostrukturelle Einflussgrößen auf die Ermüdungslebensdauer metallischer Werkstoffe im VHCF-Bereich

- The role of SNARE TMDs during exocytosis of secretory granules

- GRK 1962: Dynamische Wechselwirkungen an Biologischen Membranen – von Einzelmolekülen zum Gewebe

- Characterization of molecular diffusion in liquids with dissolved gases

- Selbstadaption für zeitschrittbasierte Simulationstechniken auf heterogenen HPC-Systemen

- Ultra-Skalierbare Multiphysiksimulationen für Erstarrungsprozesse in Metallen

- Tapping the potential of Earth Observations

- Influence of Topological Anisotropy on the Mechanical Properties of Silicate Glasses

- Thermophysical Properties of Long-Chained Hydrocarbons, Alcohols, and their Mixtures with Dissolved Gases

- Theory

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Process-Oriented Performance Engineering Service Infrastructure for Scientific Software at German HPC Centers

- Large Scale Simulations on Carbon Allotropes (C01)

- ESSEX - Equipping Sparse Solvers for Exascale

- Cooperative Action of SNARE Peptides in Fusion

- Fractures across Scales: Integrating Mechanics, Materials Science, Mathematics, Chemistry, and Physics/ Skalenübergreifende Bruchvorgänge: Integration von Mechanik, Materialwissenschaften, Mathematik, Chemie und Physik

- Equipping Sparse Solvers for Exascale II (ESSEX-II)

- ExaStencils - Advanced Stencil-Code Engineering

- Detection and Attribution of climate change for the mountain cryosphere: Advancing to the process-level

- Dispersion Effects on Reactivity and Chemo-, Regio- and Stereoselectivity in Organocatalysed Domino Reactions: A Joint Experimental and Theoretical Study

- TERRA-NEO - Integrated Co-Design of an Exascale Earth Mantle Modeling Framework

- GRK 1962: Dynamische Wechselwirkungen an Biologischen Membranen – von Einzelmolekülen zum Gewebe

- EXASTEEL II - Bridging Scales for Multiphase Steels

- Plastic deformation, crack nucleation and fracture in lightweight intermetallic composite materials

- Privacy-preserving analysis of distributed medical data

- Selbstadaption für zeitschrittbasierte Simulationstechniken auf heterogenen HPC-Systemen

- Quantenchemische Untersuchungen zu Bildung, Struktur, Energie und elektronischen Eigenschaften von Carbinen, Fullerenen und Graphenen (C02)

- Energy Oriented Center of Excellence: toward exascale for energy (Performance evaluation, modelling and optimization)

- Tapping the potential of Earth Observations

- Metaprogrammierung für Beschleunigerarchitekturen

- Deep-Learning-Informed Glacio-Hydrological Threat

Research projects associated with the Tier3/NHR systems Alex+Fritz (items occur multiple times if a field is mentioned for multiple clusters) – extracted from FAU’s CRIS database

- Medical Image Analysis with Normative Machine Learning

- DatenREduktion für Exascale-Anwendungen in der Fusionsforschung

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Der skalierbare Strömungsraum

- Fracture across Scales: Integrating Mechanics, Materials Science, Mathematics, Chemistry, and Physics (FRASCAL)

- Tapping the potential of Earth Observations

- DatenREduktion für Exascale-Anwendungen in der Fusionsforschung

- International Doctoral Program: Measuring and Modelling Mountain glaciers and ice caps in a Changing Climate (M³OCCA)

- Der skalierbare Strömungsraum

- Fracture across Scales: Integrating Mechanics, Materials Science, Mathematics, Chemistry, and Physics (FRASCAL)

- Tapping the potential of Earth Observations

Publications associated with any of the HPC clusters except Alex+Fritz (items occur multiple times if a publication is linked to multiple clusters) – extracted from FAU’s CRIS database

- , , , :

Mass changes of the northern Antarctic Peninsula Ice Sheet derived from repeat bi-static synthetic aperture radar acquisitions for the period 2013–2017

In: Cryosphere 17 (2023), p. 4629-4644

ISSN: 1994-0416

DOI: 10.5194/tc-17-4629-2023 - , , :

Error-tolerant quantum convolutional neural networks for symmetry-protected topological phases

In: Physical Review Research 6 (2024), Article No.: 033111

ISSN: 2643-1564

DOI: 10.1103/PhysRevResearch.6.033111 - , , , , , , , , , , :

Superlattice approach to doping infinite-layer nickelates

In: Physical Review B 104 (2021)

ISSN: 0163-1829

DOI: 10.1103/PhysRevB.104.165137 - , , , , , , , :

Large-scale simulations of Floquet physics on near-term quantum computers

In: npj Quantum Information 10 (2024), Article No.: 84

ISSN: 2056-6387

DOI: 10.1038/s41534-024-00866-1

URL: https://arxiv.org/abs/2303.02209 - , , , , , , , , , , , , , , :

Classification of rheumatoid arthritis from hand motion capture data using machine learning

13. Kongress der Deutschen Gesellschaft für Biomechanik (DGfB) (Heidelberg, 2024-04-24 - 2024-04-26) - , :

Towards quantum gravity with neural networks: solving quantum Hamilton constraints of 3d Euclidean gravity in the weak coupling limit

In: Classical and Quantum Gravity 41 (2024), Article No.: 215006

ISSN: 0264-9381

DOI: 10.1088/1361-6382/ad7c14 - , :

Towards quantum gravity with neural networks: solving the quantum Hamilton constraint of U(1) BF theory

In: Classical and Quantum Gravity 41 (2024), Article No.: 225014

ISSN: 0264-9381

DOI: 10.1088/1361-6382/ad84af - , , , , :

Variational Hamiltonian simulation for translational invariant systems via classical pre-processing

In: Quantum Science and Technology 8 (2023), Article No.: 025006

ISSN: 2058-9565

DOI: 10.1088/2058-9565/acb1d0 - , , , , , , , , , , :

Distributed Global Debris Thickness Estimates Reveal Debris Significantly Impacts Glacier Mass Balance

In: Geophysical Research Letters 48 (2021), Article No.: e2020GL091311

ISSN: 0094-8276

DOI: 10.1029/2020GL091311 - , , , , :

Global glacier surface elevation change and geodetic mass balance estimations

13th European Conference on Synthetic Aperture Radar, EUSAR 2021 (Virtual, 2021-03-29 - 2021-04-01)

In: Proceedings of the European Conference on Synthetic Aperture Radar, EUSAR 2021

- , :

Towards quantum gravity with neural networks: solving quantum Hamilton constraints of 3d Euclidean gravity in the weak coupling limit

In: Classical and Quantum Gravity 41 (2024), Article No.: 215006

ISSN: 0264-9381

DOI: 10.1088/1361-6382/ad7c14 - , , , , , :

Facile Access to Challenging ortho-Terphenyls via Merging Two Multi-Step Domino Reactions in One-Pot: A Joint Experimental/Theoretical Study

In: ChemCatChem (2019)

ISSN: 1867-3880

DOI: 10.1002/cctc.201900746 - , , , , , :

Physical mechanisms encoded in photoionization yield from IR+XUV setups

In: European Physical Journal D 73 (2019), Article No.: 212

ISSN: 1434-6060

DOI: 10.1140/epjd/e2019-90507-4 - , , , , , , :

Free Energy Landscape of Colloidal Clusters in Spherical Confinement

In: ACS Nano (2019)

ISSN: 1936-0851

DOI: 10.1021/acsnano.9b03039 - , , , , :

Fiducial marker recovery and detection from severely truncated data in navigation assisted spine surgery

In: Medical Physics (2022)

ISSN: 0094-2405

DOI: 10.1002/mp.15617 - , , , , :

Trajectory Optimization of a 3D Musculoskeletal Model with Inertial Sensors

XXVIII Congress of the International Society of Biomechanics (ISB) (Online, 2021-07-25 - 2021-07-29)

URL: https://www.mad.tf.fau.de/files/2021/07/nitschke_isb_2021_abstract.pdf - , :

Efficient equilibration of hard spheres with Newtonian event chains

In: Journal of Chemical Physics 150 (2019), Article No.: 174108

ISSN: 0021-9606

DOI: 10.1063/1.5090882 - , , , , :

Unexpected dipole instabilities in small molecules after ultrafast XUV irradiation

In: Physical Review A 107 (2023), Article No.: L020801

ISSN: 1050-2947

DOI: 10.1103/PhysRevA.107.L020801 - , :

Computing Feasible Points of Bilevel Problems with a Penalty Alternating Direction Method

In: INFORMS Journal on Computing (2020)

ISSN: 1091-9856

DOI: 10.1287/ijoc.2019.0945

URL: https://pubsonline.informs.org/doi/10.1287/ijoc.2019.0945 - , , , , :

Computing optimality certificates for convex mixed-integer nonlinear problems

(2021)

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/476/ - , , , , :

Change the direction: 3D optimal control simulation by directly tracking marker and ground reaction force data

In: PeerJ (2023)

ISSN: 2167-8359

DOI: 10.7717/peerj.14852

URL: https://peerj.com/articles/14852/ - , , , , , , , :

Electrocatalytic Energy Release of Norbornadiene-Based Molecular Solar Thermal Systems: Tuning the Electrochemical Stability by Molecular Design

In: ChemSusChem (2022)

ISSN: 1864-5631

DOI: 10.1002/cssc.202201483 - , , , :

Investigation of iron oxide nanoparticle formation in a spray-flame synthesis process using laser-induced incandescence

In: Applied Physics B-Lasers and Optics 130 (2024), Article No.: 199

ISSN: 0946-2171

DOI: 10.1007/s00340-024-08334-6 - , , :

Exploiting complete linear descriptions for decentralized power market problems with integralities

In: Mathematical Methods of Operations Research (2022)

ISSN: 1432-2994

DOI: 10.1007/s00186-022-00775-z

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/279 - , , , :

Modeling laser beam absorption of metal alloys at high temperatures for selective laser melting

In: Advanced Engineering Materials 23 (2021), Article No.: 2100137

ISSN: 1438-1656

DOI: 10.1002/adem.202100137 - , , , , , , , :

Adaptive Region Selection for Active Learning in Whole Slide Image Semantic Segmentation

In: The 26th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2023) 2023

DOI: 10.48550/arXiv.2307.07168 - , , , :

An average stochastic approach to two-body dissipation in finite fermion systems

In: Annals of Physics 406 (2019), p. 233-256

ISSN: 0003-4916

DOI: 10.1016/j.aop.2018.10.009 - , , , , :

Quantum Dissipative Dynamics (QDD): A real-time real-space approach to far-off-equilibrium dynamics in finite electron systems

In: Computer Physics Communications 270 (2022)

ISSN: 0010-4655

DOI: 10.1016/j.cpc.2021.108155 - , , , , , , , :

Large-scale simulations of Floquet physics on near-term quantum computers

In: npj Quantum Information 10 (2024), Article No.: 84

ISSN: 2056-6387

DOI: 10.1038/s41534-024-00866-1

URL: https://arxiv.org/abs/2303.02209 - , , , , , , , :

Achieving Highly Durable Random Alloy Nanocatalysts through Intermetallic Cores

In: ACS Nano 13 (2019), p. 4008-4017

ISSN: 1936-0851

DOI: 10.1021/acsnano.8b08007 - , , , , , , :

Effect of lattice mismatch and shell thickness on strain in core@shell nanocrystals

In: Nanoscale Advances (2020)

ISSN: 2516-0230

DOI: 10.1039/D0NA00061B - , , , , , , , , , , , , , :

Surface Chemistry of the Molecular Solar Thermal Energy Storage System 2,3-Dicyano-Norbornadiene/Quadricyclane on Ni(111)

In: ChemPhysChem (2022)

ISSN: 1439-4235

DOI: 10.1002/cphc.202200199 - , , , , , , :

Revealing bulk metallic glass crystallization kinetics during laser powder bed fusion by a combination of experimental and numerical methods

In: Journal of Non-Crystalline Solids 619 (2023), Article No.: 122532

ISSN: 0022-3093

DOI: 10.1016/j.jnoncrysol.2023.122532 - , , :

Comprehensive numerical investigation of laser powder bed fusion process conditions for bulk metallic glasses

In: Additive Manufacturing 81 (2024), Article No.: 104026

ISSN: 2214-7810

DOI: 10.1016/j.addma.2024.104026 - , , , :

Solving AC Optimal Power Flow with Discrete Decisions to Global Optimality

In: INFORMS Journal on Computing (2023)

ISSN: 1091-9856

DOI: 10.1287/ijoc.2023.1270

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/323 - , :

Self-interaction-correction and electron removal energies

In: Theoretical Chemistry Accounts 140 (2021)

ISSN: 1432-881X

DOI: 10.1007/s00214-021-02753-w - , , :

A Gradient-Based Method for Joint Chance-Constrained Optimization with Continuous Distributions

(2025)

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/532 - , , , , , , , , , , , , , :

Two Rings Around One Ball: Stability and Charge Localization of [1 : 1] and [2 : 1] Complex Ions of [10]CPP and C60/70[*]

In: Chemistry - A European Journal 29 (2022), Article No.: e202203734

ISSN: 0947-6539

DOI: 10.1002/chem.202203734 - , :

Towards quantum gravity with neural networks: solving the quantum Hamilton constraint of U(1) BF theory

In: Classical and Quantum Gravity 41 (2024), Article No.: 225014

ISSN: 0264-9381

DOI: 10.1088/1361-6382/ad84af - , , , , :

Variational Hamiltonian simulation for translational invariant systems via classical pre-processing

In: Quantum Science and Technology 8 (2023), Article No.: 025006

ISSN: 2058-9565

DOI: 10.1088/2058-9565/acb1d0 - , , :

Global time series and temporal mosaics of glacier surface velocities derived from Sentinel-1 data

In: Earth System Science Data 13 (2021), p. 4653-4675

ISSN: 1866-3508

DOI: 10.5194/essd-13-4653-2021 - , , , , , , , :

Data-driven Distributionally Robust Optimization over Time

In: INFORMS Journal on Optimization (2023)

ISSN: 2575-1484

DOI: 10.1287/ijoo.2023.0091

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/496 - , , , :

Self-diffusion coefficient and viscosity of methane and carbon dioxide via molecular dynamics simulations based on new ab initio-derived force fields

In: Fluid Phase Equilibria 481 (2019), p. 15-27

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2018.10.011 - , , , , :

Fick diffusion coefficients of binary fluid mixtures consisting of methane, carbon dioxide, and propane via molecular dynamics simulations based on simplified pair-specific ab initio-derived force fields

In: Fluid Phase Equilibria 502 (2019), Article No.: 112257

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2019.112257 - , , , , , , :

Prediction of the effect of stack height on running biomechanics using optimal control simulation

Footwear Biomechanics Symposium (FBS) 2023 (Osaka, Japan, 2023-07-26 - 2023-07-28)

In: Footwear Science 2023

DOI: 10.1080/19424280.2023.2199295 - , , , :

Highly efficient encoding for job-shop scheduling problems and its application on quantum computers

In: Quantum Science and Technology 10 (2024), p. 015051

ISSN: 2058-9565

DOI: 10.1088/2058-9565/ad9cba - , , , :

Enhancement of the predictive power of molecular dynamics simulations for the determination of self-diffusion coefficient and viscosity demonstrated for propane

In: Fluid Phase Equilibria 496 (2019), p. 69-79

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2019.05.019 - , , , :

Robust Approximation of Chance Constrained DC Optimal Power Flow under Decision-Dependent Uncertainty

In: European Journal of Operational Research (2022)

ISSN: 0377-2217

DOI: 10.1016/j.ejor.2021.10.051

URL: https://opus4.kobv.de/opus4-trr154/frontdoor/index/index/docId/312 - , , , , :

Far Off Equilibrium Dynamics in Clusters and Molecules

In: Frontiers in Physics 8 (2020), Article No.: 27

ISSN: 2296-424X

DOI: 10.3389/fphy.2020.00027 - , , , :

How many sensors are enough? Trajectory optimization using sparse inertial sensor sets

9th World Congress of Biomechanics 2022 Taipei (Taipei, 2022-07-10 - 2022-07-14)

URL: https://www.youtube.com/watch?v=TznVCgK4DF0 - , , , , , , , , , , , , , , , , :

Adjusting the energy of interfacial states in organic photovoltaics for maximum efficiency

In: Nature Communications 12 (2021), Article No.: 1772

ISSN: 2041-1723

DOI: 10.1038/s41467-021-22032-3 - , :

Particle Shape Control via Etching of Core@Shell Nanocrystals

In: ACS Nano 12 (2018), p. 9186-9195

ISSN: 1936-0851

DOI: 10.1021/acsnano.8b03759 - , , , :

Complex Crystals from Size-Disperse Spheres

In: Physical Review Letters 122 (2019)

ISSN: 0031-9007

DOI: 10.1103/PhysRevLett.122.128005 - , , :

The impact of dissipation on plasmonic versus non-collective excitation

In: Physics of Plasmas 25 (2018), Article No.: 031905

ISSN: 1070-664X

DOI: 10.1063/1.5018404 - , , , , , , , , , :

Triggering the energy release in molecular solar thermal systems: Norbornadiene-functionalized trioxatriangulen on Au(111)

In: Nano Energy 95 (2022), Article No.: 107007

ISSN: 2211-2855

DOI: 10.1016/j.nanoen.2022.107007 - , , , , , , , , , , :

Distributed Global Debris Thickness Estimates Reveal Debris Significantly Impacts Glacier Mass Balance

In: Geophysical Research Letters 48 (2021), Article No.: e2020GL091311

ISSN: 0094-8276

DOI: 10.1029/2020GL091311 - , , :

Predictive simulation of bulk metallic glass crystallization during laser powder bed fusion

In: Additive Manufacturing 59 (2022), Article No.: 103121

ISSN: 2214-7810

DOI: 10.1016/j.addma.2022.103121 - , , , , :

Global glacier surface elevation change and geodetic mass balance estimations

13th European Conference on Synthetic Aperture Radar, EUSAR 2021 (Virtual, 2021-03-29 - 2021-04-01)

In: Proceedings of the European Conference on Synthetic Aperture Radar, EUSAR 2021 - , , :

Information Content of the Parity-Violating Asymmetry in Pb-208

In: Physical Review Letters 127 (2021)

ISSN: 0031-9007

DOI: 10.1103/PhysRevLett.127.232501 - , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , :

Observing glacier elevation changes from spaceborne optical and radar sensors - an inter-comparison experiment using ASTER and TanDEM-X data

In: Cryosphere 18 (2024), p. 3195-3230

ISSN: 1994-0416

DOI: 10.5194/tc-18-3195-2024 - , , , , , :

Elucidating activating and deactivating effects of carboxylic acids on polyoxometalate-catalysed three-phase liquid–liquid-gas reactions

In: Chemical Engineering Science 264 (2022), Article No.: 118143

ISSN: 0009-2509

DOI: 10.1016/j.ces.2022.118143 - , , , , , , , , , , , , :

Progress towards a realistic theoretical description of C60 photoelectron-momentum imaging experiments using time-dependent density-functional theory

In: Physical Review A - Atomic, Molecular, and Optical Physics 91 (2015), Article No.: 042514

ISSN: 1094-1622

DOI: 10.1103/PhysRevA.91.042514 - , , , , , , :

On electricity market equilibria with storage: Modeling, uniqueness, and a distributed ADMM

In: Computers & Operations Research 114 (2020)

ISSN: 0305-0548

DOI: 10.1016/j.cor.2019.104783

URL: https://www.sciencedirect.com/science/article/abs/pii/S0305054819302254?via=ihub - , , , , , , , , , , , :

Screening Nanographene-Mediated Inter(Porphyrin) Communication to Optimize Inter(Porphyrin–Fullerene) Forces

In: Advanced Energy Materials (2021)

ISSN: 1614-6832

DOI: 10.1002/aenm.202100158 - , , , , :

Tomographic single-shot time-resolved laser-induced incandescence for soot characterization in turbulent flames

In: Proceedings of the Combustion Institute 40 (2024), p. 105262

ISSN: 1540-7489

DOI: 10.1016/j.proci.2024.105262 - , :

Probing electron dynamics by IR+XUV pulses

In: European Physical Journal D 74 (2020), Article No.: 162

ISSN: 1434-6060

DOI: 10.1140/epjd/e2020-10053-4 - , , , , :

Estimating 3D kinematics and kinetics from virtual inertial sensor data through musculoskeletal movement simulations

In: Frontiers in Bioengineering and Biotechnology 12 (2024)

ISSN: 2296-4185

DOI: 10.3389/fbioe.2024.1285845 - , , , :

Design and Additive Manufacturing of 3D Phononic Band Gap Structures Based on Gradient Based Optimization

In: Materials 10 (2017)

ISSN: 1996-1944

DOI: 10.3390/ma10101125 - , , , , :

On the stability of hole states in molecules and clusters

In: European Physical Journal - Special Topics (2022)

ISSN: 1951-6355

DOI: 10.1140/epjs/s11734-022-00676-6 - , , , :

What should a metabolic energy model look like? Sensitivity of metabolic energy model parameters during gait

9th World Congress of Biomechanics 2022 Taipei (Taipei, 2022-07-10 - 2022-07-14) - , , :

Outer Approximation for Global Optimization of Mixed-Integer Quadratic Bilevel Problems

In: Mathematical Programming (2021)

ISSN: 0025-5610

DOI: 10.1007/s10107-020-01601-2 - , , , , :

Emission and collisional correlation in far-off equilibrium quantum systems

In: European Physical Journal D 75 (2021), Article No.: 121

ISSN: 1434-6060

DOI: 10.1140/epjd/s10053-021-00132-5 - , , , , , , :

Magic number colloidal clusters as minimum free energy structures

In: Nature Communications 9 (2018), Article No.: 5259

ISSN: 2041-1723

DOI: 10.1038/s41467-018-07600-4 - , :

Information content of the differences in the charge radii of mirror nuclei

In: Physical Review C - Nuclear Physics 105 (2022), Article No.: L021301

ISSN: 0556-2813

DOI: 10.1103/PhysRevC.105.L021301 - , , , , , :

Robust DC Optimal Power Flow with Modeling of Solar Power Supply Uncertainty via R-Vine Copulas

In: Optimization and Engineering (2022)

ISSN: 1389-4420

DOI: 10.1007/s11081-022-09761-0

URL: https://link.springer.com/article/10.1007/s11081-022-09761-0 - , , , , :

On the inclusion of dissipation on top of mean-field approaches

In: European Physical Journal B 91 (2018), Article No.: 246

ISSN: 1434-6028

DOI: 10.1140/epjb/e2018-90147-0 - , , :

Combined Theoretical Analysis of the Parity-Violating Asymmetry for Ca 48 and Pb 208

In: Physical Review Letters 129 (2022), Article No.: 232501

ISSN: 0031-9007

DOI: 10.1103/PhysRevLett.129.232501 - , , , , :

Prediction of the effect of midsole stiffness and energy return using trajectory optimisation

15th biennial Footwear Biomechanics Symposium (Online, 2021-07-21 - 2021-07-23)

In: Footwear Science 2021

DOI: 10.1080/19424280.2021.1917684

URL: https://www.mad.tf.fau.de/files/2023/05/FBS_2021_SoftvsEnergy_Submission_AOM_Declaration.pdf - , , , , , , :

Anchoring of porphyrins on atomically defined cobalt oxide: In-situ infrared spectroscopy at the electrified solid/liquid interface

In: Surface Science 718 (2022), Article No.: 122013

ISSN: 0039-6028

DOI: 10.1016/j.susc.2021.122013 - , , , :

Mass changes of the northern Antarctic Peninsula Ice Sheet derived from repeat bi-static synthetic aperture radar acquisitions for the period 2013–2017

In: Cryosphere 17 (2023), p. 4629-4644

ISSN: 1994-0416

DOI: 10.5194/tc-17-4629-2023 - , , , , :

Geodetic Mass Balance of the South Shetland Islands Ice Caps, Antarctica, from Differencing TanDEM-X DEMs

In: Remote Sensing 13 (2021), p. 3408

ISSN: 2072-4292

DOI: 10.3390/rs13173408 - , , , , , :

Investigation of Cycloparaphenylenes (CPPs) and their Noncovalent Ring-in-Ring and Fullerene-in-Ring Complexes by (Matrix-Assisted) Laser Desorption/Ionization and Density Functional Theory

In: Chemistry - A European Journal (2020)

ISSN: 0947-6539

DOI: 10.1002/chem.202001503

- , , , , :

New fluorogenic triacylglycerols as sensors for dynamic measurement of lipid oxidation

In: Analytical and Bioanalytical Chemistry (2024)

ISSN: 1618-2642

DOI: 10.1007/s00216-024-05642-w - , , , , :

Deep Learning for Jazz Walking Bass Transcription

In: Proceedings of the AES Conference on Semantic Audio, Erlangen, Germany: 2017 - , , , , , :

Conditional Random Fields for Improving Deep Learning-based Glacier Calving Front Delineations

International Geoscience and Remote Sensing Symposium 2023 (Pasadena, California, 2023-07-16 - 2023-07-21)

In: IGARSS 2023 - 2023 IEEE International Geoscience and Remote Sensing Symposium 2023

DOI: 10.1109/IGARSS52108.2023.10282915

URL: https://ieeexplore.ieee.org/document/10282915 - , , , , , , , , :

Tautomeric Equilibria of Nucleobases in the Hachimoji Expanded Genetic Alphabet

In: Journal of Chemical Theory and Computation 16 (2020), p. 2766-2777

ISSN: 1549-9618

DOI: 10.1021/acs.jctc.9b01079 - , , , , , , , :

Mode of Metal Ligation Governs Inhibition of Carboxypeptidase A

In: International Journal of Molecular Sciences 25 (2024), p. 13725

ISSN: 1422-0067

DOI: 10.3390/ijms252413725 - , , , , :

The Influence of Chemical Change on Protein Dynamics: A Case Study with Pyruvate Formate-Lyase

In: Chemistry - A European Journal (2019)

ISSN: 0947-6539

DOI: 10.1002/chem.201900663 - , , , , , , , , , :

21-Hydroxypregnane 21-O-malonylation, a crucial step in cardenolide biosynthesis, can be achieved by substrate-promiscuous BAHD-type phenolic glucoside malonyltransferases from Arabidopsis thaliana and homolog proteins from Digitalis lanata

In: Phytochemistry 187 (2021), Article No.: 112710

ISSN: 0031-9422

DOI: 10.1016/j.phytochem.2021.112710 - , , , :

Agonist binding and G protein coupling in histamine H2 receptor: A molecular dynamics study

In: International Journal of Molecular Sciences 21 (2020), p. 1-18

ISSN: 1422-0067

DOI: 10.3390/ijms21186693 - , , , , , , :

AMD-HookNet for Glacier Front Segmentation

In: IEEE Transactions on Geoscience and Remote Sensing 61 (2023), p. 1-12

ISSN: 0196-2892

DOI: 10.1109/TGRS.2023.3245419

URL: https://ieeexplore.ieee.org/document/10044700 - , , , , :

Analysis of Embedded GPU Architectures for AI in Neuromuscular Applications

In: IADIS International Journal on Computer Science and Information Systems 19 (2024), p. 1-14

ISSN: 1646-3692

DOI: 10.33965/ijcsis_2024190101

URL: http://www.iadisportal.org/ijcsis/papers/2024190101.pdf - , , , , , , :

Specific engineered G protein coupling to histamine receptors revealed from cellular assay experiments and accelerated molecular dynamics simulations

In: International Journal of Molecular Sciences 22 (2021), Article No.: 10047

ISSN: 1422-0067

DOI: 10.3390/ijms221810047 - , , , , :

Structure-based functional analysis of effector protein SifA in living cells reveals motifs important for Salmonella intracellular proliferation

In: International Journal of Medical Microbiology 308 (2018), p. 84-96

ISSN: 1438-4221

DOI: 10.1016/j.ijmm.2017.09.004 - , , , , :

Diffusion coefficients in binary electrolyte mixtures by dynamic light scattering and molecular dynamics simulations

In: Electrochimica Acta 462 (2023), Article No.: 142637

ISSN: 0013-4686

DOI: 10.1016/j.electacta.2023.142637 - , , , , :

Diffusivities in Binary Mixtures of Ammonia Dissolved in n-Hexane, 1-Hexanol, or Cyclohexane Determined by Dynamic Light Scattering and Molecular Dynamics Simulations

In: Journal of Chemical and Engineering Data 68 (2023), p. 2585-2598

ISSN: 0021-9568

DOI: 10.1021/acs.jced.3c00437 - , , :

Proportional and Simultaneous Real-Time Control of the Full Human Hand From High-Density Electromyography

In: IEEE Transactions on Neural Systems and Rehabilitation Engineering 31 (2023), p. 3118-3131

ISSN: 1534-4320

DOI: 10.1109/TNSRE.2023.3295060 - , , , , :

Mutual and Thermal Diffusivities in Binary Mixtures of n-Hexane or 1-Hexanol with Krypton, R143a, or Sulfur Hexafluoride by Using Dynamic Light Scattering and Molecular Dynamics Simulations

In: Journal of Chemical and Engineering Data 68 (2023), p. 1343-1357

ISSN: 0021-9568

DOI: 10.1021/acs.jced.3c00143 - , , , :

Diffusivities in Binary Mixtures of Cyclohexane or Ethyl Butanoate with Dissolved CH4 or R143a Close to Infinite Dilution

In: Journal of Chemical and Engineering Data 68 (2023), p. 339-348

ISSN: 0021-9568

DOI: 10.1021/acs.jced.2c00696 - , , , , :

Inovis: Instant Novel-View Synthesis

SIGGRAPH Asia 2023 Sydney (Sydney, 2023-12-12 - 2023-12-15)

In: Association for Computing Machinery (ed.): ACM SIGGRAPH Asia 2023 Conference Proceedings 2023

DOI: 10.1145/3610548.3618216

URL: https://reality.tf.fau.de/publications/2023/harrerfranke2023inovis - , , , , , , , :

Learning a Hand Model from Dynamic Movements Using High-Density EMG and Convolutional Neural Networks

In: IEEE Transactions on Biomedical Engineering (2024), p. 1-12

ISSN: 0018-9294

DOI: 10.1109/TBME.2024.3432800 - :

Learning Audio Representations for Cross-Version Retrieval of Western Classical Music (Dissertation, 2021)

URL: https://nbn-resolving.org/urn:nbn:de:bvb:29-opus4-167774 - , , , , :

In Silico Study of Camptothecin-Based Pro-Drugs Binding to Human Carboxylesterase 2

In: Biomolecules 14 (2024), Article No.: 153

ISSN: 2218-273X

DOI: 10.3390/biom14020153 - , , :

A Metadynamics-Based Protocol for the Determination of GPCR-Ligand Binding Modes

In: International Journal of Molecular Sciences 20 (2019)

ISSN: 1422-0067

DOI: 10.3390/ijms20081970 - , , , , , :

Probing the potential of CnaB-type domains for the design of tag/catcher systems

In: PLoS ONE 12 (2017)

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0179740 - , , :

Towards Leitmotif Activity Detection in Opera Recordings

In: Transactions of the International Society for Music Information Retrieval 4 (2021), p. 127-140

ISSN: 2514-3298

DOI: 10.5334/tismir.116 - , , :

Binding of histamine to the H1 receptor - a molecular dynamics study

In: Journal of Molecular Modeling 24 (2018)

ISSN: 1610-2940

DOI: 10.1007/s00894-018-3873-7 - , , , , , :

Accurate Continuous Prediction of 14 Degrees of Freedom of the Hand from Myoelectrical Signals through Convolutive Deep Learning

44th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2022 (Scottish Event Campus, Glasgow, UK, 2022-07-11 - 2022-07-15)

DOI: 10.1109/EMBC48229.2022.9870937 - , , , , , , , , , :

Exploring Dataset Bias and Scaling Techniques in Multi-Source Gait Biomechanics: An Explainable Machine Learning Approach

In: ACM Transactions on Intelligent Systems and Technology 20 (2024), Article No.: 20

ISSN: 2157-6904

DOI: 10.1145/3702646 - , , , , , , , , :

Merging bioresponsive release of insulin-like growth factor I with 3D printable thermogelling hydrogels

In: Journal of Controlled Release 347 (2022), p. 115-126

ISSN: 0168-3659

DOI: 10.1016/j.jconrel.2022.04.028 - , , :

Effect of Ions and Sequence Variants on the Antagonist Binding Properties of the Histamine H1 Receptor

In: International Journal of Molecular Sciences 23 (2022), Article No.: 1420

ISSN: 1422-0067

DOI: 10.3390/ijms23031420 - , :

Probing the role of intercalating protein sidechains for kink formation in DNA

In: PLoS ONE 13 (2018)

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0192605 - , , :

Oxidation Enhances Binding of Extrahelical 5-Methyl-Cytosines by Thymine DNA Glycosylase

In: Journal of Physical Chemistry B 126 (2022), p. 1188-1201

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.1c09896 - , , :

PoseBias: On Dataset Bias and Task Difficulty - Is there an Optimal Camera Position for Facial Image Analysis?

2023 IEEE/CVF International Conference on Computer Vision Workshops, ICCVW 2023 (Paris, 2023-10-02 - 2023-10-06)

In: 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) 2023

DOI: 10.1109/ICCVW60793.2023.00334 - , , , , , , :

Dynamically Sampled Nonlocal Gradients for Stronger Adversarial Attacks

International Joint Conference on Neural Networks (IJCNN) (Online, 2021-07-18 - 2021-07-22)

DOI: 10.1109/ijcnn52387.2021.9534190 - , , , :

Molecular dynamics simulations of liquid ethane up to 298.15 K

In: Molecular Physics (2023), Article No.: e2211401

ISSN: 0026-8976

DOI: 10.1080/00268976.2023.2211401 - , , , :

Data-Driven Solo Voice Enhancement for Jazz Music Retrieval

In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 2017 - , , , , :

Exploring Reactive Conformations of Coenzyme A during Binding and Unbinding to Pyruvate Formate-Lyase

In: Journal of Physical Chemistry A (2019)

ISSN: 1520-5215

DOI: 10.1021/acs.jpca.9b06913 - , , , , , , , , , , , , , , , , , , :

A concept of dual-responsive prodrugs based on oligomerization-controlled reactivity of ester groups: an improvement of cancer cells versus neutrophils selectivity of camptothecin

In: RSC Medicinal Chemistry (2024)

ISSN: 2632-8682

DOI: 10.1039/d3md00609c - , , :

Interaction of Glycolipids with the Macrophage Surface Receptor Mincle - a Systematic Molecular Dynamics Study

In: Scientific Reports 8 (2018)

ISSN: 2045-2322

DOI: 10.1038/s41598-018-23624-8 - , , , , , , , , , , , , , :

N-Terminus to Arginine Side-Chain Cyclization of Linear Peptidic Neuropeptide Y Y4 Receptor Ligands Results in Picomolar Binding Constants

In: Journal of Medicinal Chemistry 64 (2021), p. 16746-16769

ISSN: 0022-2623

DOI: 10.1021/acs.jmedchem.1c01574 - , , , , , , , , , :

Structural and functional dissection reveals distinct roles of Ca2+-binding sites in the giant adhesin SiiE of Salmonella enterica

In: PLoS Pathogens 13 (2017), Article No.: e1006418

ISSN: 1553-7366

DOI: 10.1371/journal.ppat.1006418 - , , , , , , , , :

2'-Alkynyl spin-labelling is a minimally perturbing tool for DNA structural analysis

In: Nucleic Acids Research 48 (2020), p. 2830-2840

ISSN: 0305-1048

DOI: 10.1093/nar/gkaa086 - , , :

Singing Voice Detection in Opera Recordings: A Case Study on Robustness and Generalization

In: Electronics 10 (2021)

ISSN: 2079-9292

DOI: 10.3390/electronics10101214 - , , , , , , , , , , :

A novel D-amino acid peptide with therapeutic potential (ISAD1) inhibits aggregation of neurotoxic disease-relevant mutant Tau and prevents Tau toxicity in vitro

In: Alzheimer's Research and Therapy 14 (2022)

ISSN: 1758-9193

DOI: 10.1186/s13195-022-00959-z - , , , :

Influence of spatio-temporal filtering on hand kinematics estimation from high-density EMG signals

In: Journal of Neural Engineering 21 (2024), p. 026014

ISSN: 1741-2560

DOI: 10.1088/1741-2552/ad3498 - , , , :

Conformational Dynamics of Herpesviral NEC Proteins in Different Oligomerization States

In: International Journal of Molecular Sciences 19 (2018)

ISSN: 1422-0067

DOI: 10.3390/ijms19102908 - , , , , , , , , , :

Potent Tau Aggregation Inhibitor D-Peptides Selected against Tau-Repeat 2 Using Mirror Image Phage Display

In: ChemBioChem (2021)

ISSN: 1439-4227

DOI: 10.1002/cbic.202100287 - , , , :

Dilated deeply supervised networks for hippocampus segmentation in MRI

Workshop on Bildverarbeitung für die Medizin, 2019 (Lübeck, 2019-03-17 - 2019-03-19)

In: Thomas M. Deserno, Andreas Maier, Christoph Palm, Heinz Handels, Klaus H. Maier-Hein, Thomas Tolxdorff (ed.): Informatik aktuell 2019

DOI: 10.1007/978-3-658-25326-4_18 - , , , , , , , :

Mitochondria-Catalyzed Activation of Anticancer Prodrugs

In: ChemCatChem (2025)

ISSN: 1867-3880

DOI: 10.1002/cctc.202500054 - , , , :

Identification of Spared and Proportionally Controllable Hand Motor Dimensions in Motor Complete Spinal Cord Injuries Using Latent Manifold Analysis

In: IEEE Transactions on Neural Systems and Rehabilitation Engineering 32 (2024), p. 3741-3750

ISSN: 1534-4320

DOI: 10.1109/TNSRE.2024.3472063 - , , , , :

xLength: Predicting Expected Ski Jump Length Shortly after Take-Off Using Deep Learning

In: Sensors 22 (2022), Article No.: 8474

ISSN: 1424-8220

DOI: 10.3390/s22218474 - , , :

Ansätze zur datengetriebenen Transkription einstimmiger Jazzsoli

In: Proceedings of the Deutsche Jahrestagung für Akustik (DAGA), München, Germany: 2018 - , , :

ArCSEM: Artistic Colorization of SEM Images via Gaussian Splatting

AI for Visual Arts Workshop and Challenges (AI4VA) in conjunction with European Conference on Computer Vision (ECCV) 2024, Milano, Italy (Milan, 2024-09-29)

Open Access: https://arxiv.org/pdf/2410.21310 - , :

CTC-Based Learning of Chroma Features for Score-Audio Music Retrieval

In: IEEE/ACM Transactions on Audio, Speech and Language Processing 29 (2021), p. 2957-2971

ISSN: 2329-9290

DOI: 10.1109/TASLP.2021.3110137 - , , , , , , , , , :

Superoleophilic Magnetic Iron Oxide Nanoparticles for Effective Hydrocarbon Removal from Water

In: Advanced Functional Materials (2019)

ISSN: 1616-301X

DOI: 10.1002/adfm.201805742

URL: https://onlinelibrary.wiley.com/doi/10.1002/adfm.201805742 - , , , :

Wearable Sensors for Activity Recognition in Ultimate Frisbee Using Convolutional Neural Networks and Transfer Learning

In: Sensors 22 (2022), p. 2560

ISSN: 1424-8220

DOI: 10.3390/s22072560 - , , , , , , , , , , , , , :

The remediation of nano-/microplastics from water

In: Materials Today (2021)

ISSN: 1369-7021

DOI: 10.1016/j.mattod.2021.02.020

URL: https://www.sciencedirect.com/science/article/pii/S1369702121000821 - , :

Alkali ion influence on structure and stability of fibrillar amyloid-beta oligomers

In: Journal of Molecular Modeling 25 (2019), Article No.: 37

ISSN: 1610-2940

DOI: 10.1007/s00894-018-3920-4 - , , , , , , , , , , :

Key interactions in the trimolecular complex consisting of the rheumatoid arthritis-associated DRB1*04:01 molecule, the major glycosylated collagen II peptide and the T-cell receptor

In: Annals of the Rheumatic Diseases (2022)

ISSN: 0003-4967

DOI: 10.1136/annrheumdis-2021-220500 - , , , , :

Spontaneous Membrane Nanodomain Formation in the Absence or Presence of the Neurotransmitter Serotonin

In: Frontiers in Cell and Developmental Biology 8 (2020), Article No.: 601145

ISSN: 2296-634X

DOI: 10.3389/fcell.2020.601145 - , , , , , , , , :

Chemical-recognition-driven selectivity of SnO2-nanowire-based gas sensors

In: Nano Today (2021)

ISSN: 1748-0132

DOI: 10.1016/j.nantod.2021.101265

URL: https://www.sciencedirect.com/science/article/abs/pii/S1748013221001900 - , , , , , , , , , , , , , :

Automated Video-Based Analysis Framework for Behavior Monitoring of Individual Animals in Zoos Using Deep Learning—A Study on Polar Bears

In: Animals 12 (2022), p. 692

ISSN: 2076-2615

DOI: 10.3390/ani12060692 - , , , , , , :

Mutations in the B.1.1.7 SARS-CoV-2 Spike Protein Reduce Receptor-Binding Affinity and Induce a Flexible Link to the Fusion Peptide

In: Biomedicines 9 (2021)

ISSN: 2227-9059

DOI: 10.3390/biomedicines9050525

URL: https://www.mdpi.com/2227-9059/9/5/525/xml - , , , , , , , , , :

Properties of Oligomeric Interaction of the Cytomegalovirus Core Nuclear Egress Complex (NEC) and Its Sensitivity to an NEC Inhibitory Small Molecule

In: Viruses 13 (2021)

ISSN: 1999-4915

DOI: 10.3390/v13030462 - , :

Using Weakly Aligned Score—Audio Pairs to Train Deep Chroma Models for Cross-Modal Music Retrieval

International Society for Music Information Retrieval Conference

DOI: 10.5281/zenodo.4245400 - , :

Learning low-dimensional embeddings of audio shingles for cross-version retrieval of classical music

In: Applied Sciences 10 (2020), Article No.: 19

ISSN: 2076-3417

DOI: 10.3390/app10010019 - , , :

Interaction of thymine DNA glycosylase with oxidised 5-methyl-cytosines in their amino- and imino-forms

In: Molecules 26 (2021), Article No.: 5728

ISSN: 1420-3049

DOI: 10.3390/molecules26195728 - , , , :

Diffusivities in Binary Mixtures of n-Decane, n-Hexadecane, n-Octacosane, 2-Methylpentane, 2,2-Dimethylbutane, Cyclohexane, Benzene, Ethanol, 1-Decanol, Ethyl Butanoate, or n-Hexanoic Acid with Dissolved He or Kr Close to Infinite Dilution

In: Journal of Chemical and Engineering Data 67 (2022), p. 622-635

ISSN: 0021-9568

DOI: 10.1021/acs.jced.1c00922 - , , , , , , :

Out-of-the-box Calving Front Detection Method Using Deep Learning

In: Cryosphere 17 (2023), p. 4957-4977

ISSN: 1994-0416

DOI: 10.5194/tc-17-4957-2023 - , , , , , , , , , , , :

Tailored solution‐based N‐heterotriangulene thin films: Unravelling the self‐assembly

In: ChemPhysChem (2021), Article No.: cphc.202100164

ISSN: 1439-4235

DOI: 10.1002/cphc.202100164 - , :

Characterization of a lipid nanoparticle for mRNA delivery using molecular dynamics

In: European Biophysics Journal with Biophysics Letters, New York: 2021 - , , , , , , :

Computational decomposition reveals reshaping of the SARS‐CoV‐2–ACE2 interface among viral variants expressing the N501Y mutation

In: Journal of Cellular Biochemistry (2021)

ISSN: 0730-2312

DOI: 10.1002/jcb.30142 - , , :

Role of the N-terminus for the stability of an amyloid-β fibril with three-fold symmetry

In: PLoS ONE 12 (2017)

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0186347 - , :

mRNA lipid nanoparticle phase transition

In: Biophysical Journal (2022)

ISSN: 0006-3495

DOI: 10.1016/j.bpj.2022.08.037 - , , , , :

Molecular dynamics simulations of the delta and omicron SARS-CoV-2 spike – ACE2 complexes reveal distinct changes between both variants

In: Computational and Structural Biotechnology Journal 20 (2022), p. 1168-1176

ISSN: 2001-0370

DOI: 10.1016/j.csbj.2022.02.015

- , , , , , , :

Influence of dissolved hydrogen on the viscosity and interfacial tension of the liquid organic hydrogen carrier system based on diphenylmethane by surface light scattering and molecular dynamics simulations

In: International Journal of Hydrogen Energy 47 (2022), p. 39163-39178

ISSN: 0360-3199

DOI: 10.1016/j.ijhydene.2022.09.078 - , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , , :

Benefits from using mixed precision computations in the ELPA-AEO and ESSEX-II eigensolver projects

In: Japan Journal of Industrial and Applied Mathematics (2019)

ISSN: 0916-7005

DOI: 10.1007/s13160-019-00360-8 - , , , , , , , , , :

Insights from molecular dynamics simulations on structural organization and diffusive dynamics of an ionic liquid at solid and vacuum interfaces

In: Journal of Colloid and Interface Science 553 (2019), p. 350-363

ISSN: 0021-9797

DOI: 10.1016/j.jcis.2019.06.017 - , , , :

Viscosity and Interfacial Tension of Binary Mixtures Consisting of an n-Alkane, Branched Alkane, Primary Alcohol, or Branched Alcohol and a Dissolved Gas Using Equilibrium Molecular Dynamics Simulations

In: International Journal of Thermophysics 43 (2022), Article No.: 112

ISSN: 0195-928X

DOI: 10.1007/s10765-022-03038-5 - , , , , , , , , , , , , :

Surface chemistry of 2,3-dibromosubstituted norbornadiene/quadricyclane as molecular solar thermal energy storage system on Ni(111)

In: Journal of Chemical Physics 150 (2019), Article No.: 184706

ISSN: 0021-9606

DOI: 10.1063/1.5095583 - , , , , , , , , :

CuTe chains on Cu(111) by deposition of one-third of a monolayer of Te: Atomic and electronic structure

In: Physical Review B 102 (2020), Article No.: 155422

ISSN: 0163-1829

DOI: 10.1103/PhysRevB.102.155422 - , , , , :

MPC and CoArray Fortran: Alternatives to classic MPI implementations on the examples of scalable lattice Boltzmann flow solvers

15th Results and Review Workshop on High Performance Computing in Science and Engineering, HLRS 2012 (Stuttgart)

DOI: 10.1007/978-3-642-33374-3_27 - , , , , :

Interfacial Tension and Liquid Viscosity of Binary Mixtures of n-Hexane, n-Decane, or 1-Hexanol with Carbon Dioxide by Molecular Dynamics Simulations and Surface Light Scattering

In: International Journal of Thermophysics 40 (2019), Article No.: 79

ISSN: 0195-928X

DOI: 10.1007/s10765-019-2544-y - , , , , , :

Thermal, Mutual, and Self-Diffusivities of Binary Liquid Mixtures Consisting of Gases Dissolved in n-Alkanes at Infinite Dilution

In: Journal of Physical Chemistry B 122 (2018), p. 3163-3175

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.8b00733 - , , , , , , :

Fully Resolved Simulations of Dune Formation in Riverbeds

In: Kunkel JM, Yokota R, Balaji P, Keyes D (ed.): High Performance Computing: 32nd International Conference, ISC High Performance 2017, Frankfurt, Germany, June 18-22, 2017, Proceedings, Cham: Springer International Publishing, 2017, p. 3-21

ISBN: 978-3-319-58667-0

DOI: 10.1007/978-3-319-58667-0_1 - , , :

Adsorption, self-assembly and self-metalation of tetra-cyanophenyl porphyrins on semiconducting CoO(100) films

In: Surface Science 720 (2022), Article No.: 122044

ISSN: 0039-6028

DOI: 10.1016/j.susc.2022.122044 - , , , , :

Mutual and Thermal Diffusivities in Mixtures of Cyclohexane, n-Hexadecane, n-Octacosane, or n-Hexanoic Acid with Carbon Dioxide Obtained by Dynamic Light Scattering and Molecular Dynamics Simulations

In: Journal of Chemical and Engineering Data 67 (2022), p. 3059-3076

ISSN: 0021-9568

DOI: 10.1021/acs.jced.2c00487 - , , , , , :

Lattice Boltzmann methods in porous media simulations: From laminar to turbulent flow

In: Computers & Fluids 140 (2016), p. 247-259

ISSN: 0045-7930

DOI: 10.1016/j.compfluid.2016.10.007

URL: http://www.sciencedirect.com/science/article/pii/S0045793016303061 - , , , :

Modeling and analyzing performance for highly optimized propagation steps of the lattice Boltzmann method on sparse lattices

(2014)

Open Access: http://arxiv.org/abs/1410.0412

URL: https://arxiv.org/abs/1410.0412 - , , :

Aero-Vibro-Acoustic Wind Noise-Simulation Based on the Flow around a Car

In: SAE Technical Papers (2016)

ISSN: 0148-7191

DOI: 10.4271/2016-01-1804 - , , :

EvoStencils: a grammar-based genetic programming approach for constructing efficient geometric multigrid methods

In: Genetic Programming and Evolvable Machines (2021)

ISSN: 1389-2576

DOI: 10.1007/s10710-021-09412-w

URL: http://link.springer.com/article/10.1007/s10710-021-09412-w - , , , , , :

What does Power Consumption Behavior of HPC Jobs Reveal?: Demystifying, Quantifying, and Predicting Power Consumption Characteristics

34th IEEE International Parallel and Distributed Processing Symposium, IPDPS 2020 (New Orleans, LA, 2020-05-18 - 2020-05-22)

In: Proceedings - 2020 IEEE 34th International Parallel and Distributed Processing Symposium, IPDPS 2020 2020

DOI: 10.1109/IPDPS47924.2020.00087 - , , , :

Comparison of Different Propagation Steps for Lattice Boltzmann Methods

In: Computers & Mathematics with Applications 65 (2013), p. 924-935

ISSN: 0898-1221

DOI: 10.1016/j.camwa.2012.05.002

URL: http://www.sciencedirect.com/science/article/pii/S0898122112003835 - , :

Fracture toughness and bond trapping of grain boundary cracks

In: Acta Materialia 73 (2014), p. 1-11

ISSN: 1359-6454

DOI: 10.1016/j.actamat.2014.03.035 - , , :

A particle‐continuum coupling method for multiscale simulations of viscoelastic‐viscoplastic amorphous glassy polymers

In: International Journal for Numerical Methods in Engineering (2021)

ISSN: 0029-5981

DOI: 10.1002/nme.6836 - , , , , :

A Ferrocene-Based Dicationic Iron(IV) Carbonyl Complex

In: Angewandte Chemie International Edition 57 (2018), p. 14597-14601

ISSN: 1433-7851

DOI: 10.1002/anie.201809464 - , , , , :

Dynamic Cholesterol-Conditioned Dimerization of the G Protein Coupled Chemokine Receptor Type 4

In: PLoS Computational Biology 12 (2016), p. e1005169

ISSN: 1553-734X

DOI: 10.1371/journal.pcbi.1005169 - , , , :

Numerical study of plasmonic absorption enhancement in semiconductor absorbers by metallic nanoparticles

In: Journal of Applied Physics 120 (2016), Article No.: 113102

ISSN: 1089-7550

DOI: 10.1063/1.4962459 - , , , , , , , , , , , , , , , , , , , , , , , , , :

The ice‐free topography of Svalbard

In: Geophysical Research Letters 45 (2018), p. 11760-11769

ISSN: 0094-8276

DOI: 10.1029/2018GL079734

URL: https://agupubs.onlinelibrary.wiley.com/action/showCitFormats?doi=10.1029/2018GL079734 - , , , :

Aerodynamic impact of the ventricular folds in computational larynx models

In: Journal of the Acoustical Society of America 145 (2019), p. 2376-2387

ISSN: 0001-4966

DOI: 10.1121/1.5098775 - , , , , , :

Aeroacoustic analysis of the human phonation process based on a hybrid acoustic PIV approach

In: Experiments in Fluids 59 (2018)

ISSN: 0723-4864

DOI: 10.1007/s00348-017-2469-9

URL: https://link.springer.com/article/10.1007/s00348-017-2469-9/fulltext.html - , , , , :

Viscosity, Interfacial Tension, and Density of Binary-Liquid Mixtures of n-Hexadecane with n-Octacosane, 2,2,4,4,6,8,8-Heptamethylnonane, or 1-Hexadecanol at Temperatures between 298.15 and 573.15 K by Surface Light Scattering and Equilibrium Molecular Dynamics Simulations

In: Journal of Chemical and Engineering Data 66 (2021), p. 2264-2280

ISSN: 0021-9568

DOI: 10.1021/acs.jced.1c00108 - , , , , , , , :

Liquid Viscosity and Surface Tension of n-Hexane, n-Octane, n-Decane, and n-Hexadecane up to 573 K by Surface Light Scattering

In: Journal of Chemical and Engineering Data 64 (2019), p. 4116-4131

ISSN: 0021-9568

DOI: 10.1021/acs.jced.9b00525 - , , , , , :

Towards a clinically applicable computational larynx model

In: Applied Sciences 9 (2019), Article No.: 2288

ISSN: 2076-3417

DOI: 10.3390/app9112288 - , , , , , :

PGAS implementation of SpMVM and LBM with GPI

The 7th International Conference on PGAS Programming Models (Edinburgh, Scotland, UK)

In: Proceedings of the 7th International Conference on PGAS Programming Models, Edinburgh: 2013 - , , , , , , , , :

Synthesis and Characterization of Gelatin-Based Magnetic Hydrogels

In: Advanced Functional Materials 24 (2014), p. 3187-3196

ISSN: 1616-301X

DOI: 10.1002/adfm.201303547 - , , , , , , :

Characterization of Long Linear and Branched Alkanes and Alcohols for Temperatures up to 573.15 K by Surface Light Scattering and Molecular Dynamics Simulations

In: Journal of Physical Chemistry B 124 (2020), p. 4146-4163

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.0c01740 - , , :

Submonolayer copper telluride phase on Cu(111): Ad-chain and trough formation

In: Physical Review B 104 (2021), Article No.: 155426

ISSN: 0163-1829

DOI: 10.1103/PhysRevB.104.155426 - , , , , , :

An Isolable Terminal Imido Complex of Palladium and Catalytic Implications

In: Angewandte Chemie International Edition (2018)

ISSN: 1433-7851

DOI: 10.1002/anie.201809152 - , , , , :

Fick Diffusion Coefficient in Binary Mixtures of [HMIM][NTf2] and Carbon Dioxide by Dynamic Light Scattering and Molecular Dynamics Simulations

In: Journal of Physical Chemistry B 125 (2021), p. 5100-5113

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.1c01616 - , , , , :

Lattice Boltzmann benchmark kernels as a testbed for performance analysis

In: Computers & Fluids 172 (2018), p. 582-592

ISSN: 0045-7930

DOI: 10.1016/j.compfluid.2018.03.030 - , , , , , , , , :

Surface Reaction of CO on Carbide-Modified Mo(110)

In: Journal of Physical Chemistry C 121 (2017), p. 3133-3142

ISSN: 1932-7447

DOI: 10.1021/acs.jpcc.6b11950 - , , , , :

Opening the Black Box: Performance Estimation during Code Generation for GPUs

IEEE 33rd International Symposium on Computer Architecture and High Performance Computing (Belo Horizonte – Brazil, 2021-10-26 - 2021-10-28)

DOI: 10.1109/sbac-pad53543.2021.00014 - , , , , , , , , :

Diffusivities in Binary Mixtures of [AMIM][NTf2] Ionic Liquids with the Dissolved Gases H2, He, N2, CO, CO2, or Kr Close to Infinite Dilution

In: Journal of Chemical and Engineering Data 65 (2020), p. 4116-4129

ISSN: 0021-9568

DOI: 10.1021/acs.jced.0c00430 - , , , :

The Influence of Tropical Cyclones on Circulation, Moisture Transport, and Snow Accumulation at Kilimanjaro During the 2006–2007 Season

In: Journal of Geophysical Research: Atmospheres (2019)

ISSN: 2169-897X

DOI: 10.1029/2019JD030682 - , , , , , , , , , , :

On the energetics of conformational switching of molecules at and close to room temperature

In: Journal of the American Chemical Society 136 (2014), p. 1609-1616

ISSN: 0002-7863

DOI: 10.1021/ja411884p - , , , , , :

Propagation of Holes and Electrons in Metal-Organic Frameworks

In: Journal of Chemical Information and Modeling (2019)

ISSN: 1549-9596

DOI: 10.1021/acs.jcim.9b00461 - , , , , , :

Revealing the Effects of Weak Surfactants on the Dynamics of Surface Fluctuations by Surface Light Scattering

In: Journal of Physical Chemistry B 127 (2023), p. 10647-10658

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.3c06309 - , , , :

Asynchronous Checkpointing by Dedicated Checkpoint Threads

In: Recent Advances in the Message Passing Interface, Springer-Verlag, 2012, p. 289-290 (Lecture Notes in Computer Science, Vol. 7490)

ISBN: 978-3-642-33517-4

DOI: 10.1007/978-3-642-33518-1_36

URL: http://link.springer.com/chapter/10.1007/978-3-642-33518-1_36 - , , , :

3Dscript.server: true server-side 3D animation of microscopy images using a natural language-based syntax

In: Bioinformatics 37 (2021), p. 4901-4902

ISSN: 1367-4803

DOI: 10.1093/bioinformatics/btab462 - , , , :

Water in an electric field does not dance alone: The relation between equilibrium structure, time dependent viscosity and molecular motions

In: Journal of Molecular Liquids 282 (2019), p. 303-315

ISSN: 0167-7322

DOI: 10.1016/j.molliq.2019.02.055 - , , , , , :

CRAFT: A library for easier application-level Checkpoint/Restart and Automatic Fault Tolerance

In: IEEE Transactions on Parallel and Distributed Systems (2018)

ISSN: 1045-9219

DOI: 10.1109/TPDS.2018.2866794

URL: https://ieeexplore.ieee.org/document/8444763 - , , , , , :

Fluid-structure-interaction simulations of forming-air impact thermoforming

In: Polymer Engineering and Science (2022)

ISSN: 0032-3888

DOI: 10.1002/pen.25926 - , , , , :

Viscosity and Interfacial Tension of Ternary Mixtures Consisting of Linear Alkanes, Alcohols, and/or Dissolved Gases Using Surface Light Scattering and Equilibrium Molecular Dynamics Simulations

In: International Journal of Thermophysics 43 (2022), Article No.: 116

ISSN: 0195-928X

DOI: 10.1007/s10765-022-03040-x - , , , , , , , :

Achieving Highly Durable Random Alloy Nanocatalysts through Intermetallic Cores

In: ACS Nano 13 (2019), p. 4008-4017

ISSN: 1936-0851

DOI: 10.1021/acsnano.8b08007 - , , , , , , :

Effect of lattice mismatch and shell thickness on strain in core@shell nanocrystals

In: Nanoscale Advances (2020)

ISSN: 2516-0230

DOI: 10.1039/D0NA00061B - , , , , , , , :

Viscosity and Interfacial Tension of Binary Mixtures Consisting of Linear, Branched, Cyclic, or Oxygenated Hydrocarbons with Dissolved Gases Using Surface Light Scattering and Equilibrium Molecular Dynamics Simulations

In: International Journal of Thermophysics 43 (2022), Article No.: 88

ISSN: 0195-928X

DOI: 10.1007/s10765-022-03012-1 - , , :

Analytic Modeling of Idle Waves in Parallel Programs: Communication, Cluster Topology, and Noise Impact

36th International Conference on High Performance Computing, ISC High Performance 2021 (Virtual, Online, 2021-06-24 - 2021-07-02)

In: Bradford L. Chamberlain, Ana-Lucia Varbanescu, Hatem Ltaief, Piotr Luszczek (ed.): Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 2021

DOI: 10.1007/978-3-030-78713-4_19 - , , , :

Multi-material design optimization of optical properties of particulate products by discrete dipole approximation and sequential global programming

In: Structural and Multidisciplinary Optimization 66 (2023), Article No.: 5

ISSN: 1615-147X

DOI: 10.1007/s00158-022-03376-w - , , , :

Particle‐resolved turbulent flow in a packed bed: RANS, LES, and DNS simulations

In: AIChE Journal (2022)

ISSN: 0001-1541

DOI: 10.1002/aic.17615

Efficient optical simulation of nano structures in thin-film solar cells

DOI: 10.1117/12.2312545

Thermophysical properties of diphenylmethane and dicyclohexylmethane as a reference liquid organic hydrogen carrier system from experiments and molecular simulations

In: International Journal of Hydrogen Energy 45 (2020), p. 28903-28919

ISSN: 0360-3199

DOI: 10.1016/j.ijhydene.2020.07.261

An Evaluation of Different I/O Techniques for Checkpoint/Restart

2013 IEEE 27th International Parallel and Distributed Processing Symposium Workshops & PhD Forum (Boston, MA, USA, 2013-05-20 - 2013-05-24)

In: Parallel and Distributed Processing Symposium Workshops PhD Forum (IPDPSW), 2013 IEEE 27th International, n.a.: 2013

DOI: 10.1109/IPDPSW.2013.145

A survey of checkpoint/restart techniques on distributed memory systems

In: Parallel Processing Letters 23 (2013), p. 1340011-1340030

ISSN: 0129-6264

DOI: 10.1142/S0129626413400112

URL: http://www.worldscientific.com/doi/abs/10.1142/S0129626413400112

Catalytically Triggered Energy Release from Strained Organic Molecules: The Surface Chemistry of Quadricyclane and Norbornadiene on Pt(111)

In: Chemistry - A European Journal 23 (2017), p. 1613-1622

ISSN: 0947-6539

DOI: 10.1002/chem.201604443

2D-dwell-time analysis with simulations of ion-channel gating using high-performance computing

In: Biophysical Journal (2023)

ISSN: 0006-3495

DOI: 10.1016/j.bpj.2023.02.023

Quantum Criticality of Two-Dimensional Quantum Magnets with Long-Range Interactions

In: Physical Review Letters 122 (2019), Article No.: 017203

ISSN: 0031-9007

DOI: 10.1103/PhysRevLett.122.017203

Self-diffusion coefficient and viscosity of methane and carbon dioxide via molecular dynamics simulations based on new ab initio-derived force fields

In: Fluid Phase Equilibria 481 (2019), p. 15-27

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2018.10.011

Fick diffusion coefficients of binary fluid mixtures consisting of methane, carbon dioxide, and propane via molecular dynamics simulations based on simplified pair-specific ab initio-derived force fields

In: Fluid Phase Equilibria 502 (2019), Article No.: 112257

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2019.112257

Exploring Reactive Conformations of Coenzyme A during Binding and Unbinding to Pyruvate Formate-Lyase

In: Journal of Physical Chemistry A (2019)

ISSN: 1520-5215

DOI: 10.1021/acs.jpca.9b06913

A graded interphase enhanced phase-field approach for modeling fracture in polymer composites

In: Forces in Mechanics 9 (2022), Article No.: 100135

ISSN: 2666-3597

DOI: 10.1016/j.finmec.2022.100135

Enhancement of the predictive power of molecular dynamics simulations for the determination of self-diffusion coefficient and viscosity demonstrated for propane

In: Fluid Phase Equilibria 496 (2019), p. 69-79

ISSN: 0378-3812

DOI: 10.1016/j.fluid.2019.05.019

Comparative study of the carbide-modified surfaces C/Mo(110) and C/Mo(100) using high-resolution x-ray photoelectron spectroscopy

In: Physical Review B 92 (2015), p. 014114

ISSN: 0163-1829

DOI: 10.1103/PhysRevB.92.014114

Diffusivities in Binary Mixtures of n-Hexane or 1-Hexanol with Dissolved CH4, Ne, Kr, R143a, SF6, or R236fa Close to Infinite Dilution

In: Journal of Chemical and Engineering Data 66 (2021), p. 2218-2232

ISSN: 0021-9568

DOI: 10.1021/acs.jced.1c00084

Particle Shape Control via Etching of Core@Shell Nanocrystals

In: ACS nano 12 (2018), p. 9186-9195

ISSN: 1936-0851

DOI: 10.1021/acsnano.8b03759

Building a Fault Tolerant Application Using the GASPI Communication Layer

1st International Workshop on Fault-Tolerant Systems (Chicago, IL, 2015-09-08 - 2015-09-11)

In: Proceedings of FTS 2015, in conjunction with IEEE Cluster 2015: 2015

DOI: 10.1109/CLUSTER.2015.106

The Capriccio method: a scale bridging approach for polymers extended towards inelasticity

In: Proceedings in Applied Mathematics and Mechanics 20 (2021)

ISSN: 1617-7061

DOI: 10.1002/pamm.202000301

Prominent Midlatitude Circulation Signature in High Asia's Surface Climate During Monsoon

In: Journal of Geophysical Research: Atmospheres (2017)

ISSN: 2169-897X

Size-Dependent Local Ordering in Melanin Aggregates and Its Implication on Optical Properties

In: Journal of Physical Chemistry A (2019)

ISSN: 1520-5215

DOI: 10.1021/acs.jpca.9b08722

How to tame a palladium terminal imido

In: Journal of Organometallic Chemistry 864 (2018), p. 26-36

ISSN: 0022-328X

DOI: 10.1016/j.jorganchem.2017.12.034

Adsorption and self-assembly of porphyrins on ultrathin CoO films on Ir(100)

In: Beilstein Journal of Nanotechnology 11 (2020), p. 1516-1524

ISSN: 2190-4286

DOI: 10.3762/bjnano.11.134

Influence of Liquid Structure on Fickian Diffusion in Binary Mixtures of n-Hexane and Carbon Dioxide Probed by Dynamic Light Scattering, Raman Spectroscopy, and Molecular Dynamics Simulations

In: Journal of Physical Chemistry B 122 (2018), p. 7122-7133

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.8b03568

Electronic structure of tetraphenylporphyrin layers on Ag(100)

In: Physical Review B 95 (2017), p. 115414

ISSN: 0163-1829

DOI: 10.1103/PhysRevB.95.115414

URL: http://link.aps.org/doi/10.1103/PhysRevB.95.115414

Molecular docking sites designed for the generation of highly crystalline covalent organic frameworks

In: Nature Chemistry 8 (2016), p. 310-316

ISSN: 1755-4330

DOI: 10.1038/NCHEM.2444

A comparative study of fluid-particle coupling methods for fully resolved lattice Boltzmann simulations

In: Computers & Fluids (2017), p. 74-89

ISSN: 0045-7930

DOI: 10.1016/j.compfluid.2017.05.033

URL: http://www.sciencedirect.com/science/article/pii/S0045793017302086

Validation and calibration of coupled porous-medium and free-flow problems using pore-scale resolved models

In: Computational Geosciences (2020)

ISSN: 1420-0597

DOI: 10.1007/s10596-020-09994-x

URL: https://link.springer.com/article/10.1007/s10596-020-09994-x

Binding Characteristics of Sphingosine-1-Phosphate to ApoM hints to Assisted Release Mechanism via the ApoM Calyx-Opening

In: Scientific Reports 6 (2016), p. 30655

ISSN: 2045-2322

DOI: 10.1038/srep30655

Mutual and Thermal Diffusivities as Well as Fluid-Phase Equilibria of Mixtures of 1-Hexanol and Carbon Dioxide

In: Journal of Physical Chemistry B 124 (2020), p. 2482-2494

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.0c00646

An Electrically Conducting Three-Dimensional Iron-Catecholate Porous Framework

In: Angewandte Chemie International Edition (2021)

ISSN: 1433-7851

DOI: 10.1002/anie.202102670

Computational Models of Laryngeal Aerodynamics: Potentials and Numerical Costs

In: Journal of Voice 33 (2019), p. 385-400

ISSN: 0892-1997

DOI: 10.1016/j.jvoice.2018.01.001

Superoleophilic Magnetic Iron Oxide Nanoparticles for Effective Hydrocarbon Removal from Water

In: Advanced Functional Materials (2019)

ISSN: 1616-301X

DOI: 10.1002/adfm.201805742

URL: https://onlinelibrary.wiley.com/doi/10.1002/adfm.201805742

The remediation of nano-/microplastics from water

In: Materials Today (2021)

ISSN: 1369-7021

DOI: 10.1016/j.mattod.2021.02.020

URL: https://www.sciencedirect.com/science/article/pii/S1369702121000821

Modular Approach to Kekulé Diradicaloids Derived from Cyclic (Alkyl)(amino)carbenes

In: Journal of the American Chemical Society 140 (2018), p. 2546-2554

ISSN: 1520-5126

DOI: 10.1021/jacs.7b11183

Atom probe informed simulations of dislocation-precipitate interactions reveal the importance of local interface curvature

In: Acta Materialia 92 (2015), p. 33-45

ISSN: 1359-6454

DOI: 10.1016/j.actamat.2015.03.050

Alkali ion influence on structure and stability of fibrillar amyloid-beta oligomers

In: Journal of Molecular Modeling 25 (2019), Article No.: 37

ISSN: 1610-2940

DOI: 10.1007/s00894-018-3920-4

Effect of the degree of hydrogenation on the viscosity, surface tension, and density of the liquid organic hydrogen carrier system based on diphenylmethane

In: International Journal of Hydrogen Energy 47 (2022), p. 6111-6130

ISSN: 0360-3199

DOI: 10.1016/j.ijhydene.2021.11.198

Pushing Electrons - Which Carbene Ligand for Which Application?

In: Organometallics 37 (2018), p. 275-289

ISSN: 0276-7333

DOI: 10.1021/acs.organomet.7b00720

Diffusivities in 1-alcohols containing dissolved H2, He, N2, CO, or CO2 close to infinite dilution

In: Journal of Physical Chemistry B 123 (2019), p. 8777-8790

ISSN: 1520-6106

DOI: 10.1021/acs.jpcb.9b06211

Rupture of graphene sheets with randomly distributed defects

In: AIMS Materials Science 3 (2016), p. 1340-1349

ISSN: 2372-0468

DOI: 10.3934/matersci.2016.4.1340

WALBERLA: A block-structured high-performance framework for multiphysics simulations

In: Computers & Mathematics with Applications (2020)

ISSN: 0898-1221

DOI: 10.1016/j.camwa.2020.01.007

Viscosity and Interfacial Tension of Binary Mixtures of n-Hexadecane with Dissolved Gases Using Surface Light Scattering and Equilibrium Molecular Dynamics Simulations

In: Journal of Chemical and Engineering Data 66 (2021), p. 3205-3218

ISSN: 0021-9568

DOI: 10.1021/acs.jced.1c00289

How to tame a palladium terminal oxo

In: Chemical Science 9 (2018), p. 1155-1167

ISSN: 2041-6520

DOI: 10.1039/c7sc05034h

Chemical-recognition-driven selectivity of SnO2-nanowire-based gas sensors

In: Nano Today (2021)

ISSN: 1748-0132

DOI: 10.1016/j.nantod.2021.101265

URL: https://www.sciencedirect.com/science/article/abs/pii/S1748013221001900

Chip-level and multi-node analysis of energy-optimized lattice Boltzmann CFD simulations

In: Concurrency and Computation-Practice & Experience (2015), p. 1-5